Recurrent Neural Network

Recurrent means 'occurring often or repeatedly'. So the next question is: what is actually repeating? If you are asking the same thing, then this article is for you. My machine learning journey was going fine until I came across the term Recurrent Neural Network, or RNN for short. It took me hours of Internet searching, paper reading, video lectures and a lot of coffee to decode RNNs.

Why do we need RNNs?

Up till now, in classical neural networks, we were unknowingly dealing with data whose individual components did not depend on each other, or in other words were not in a 'sequence'. For example, in a house price regression model the data set may contain features like area, population, crime rate and so on, and you may choose the best features to predict the price of the house. But the point to note here is that we are not concerned about the order in which these data samples occur during training.

So while our neural network's weights and biases are being trained, row number 3 has no direct influence on the adjustment to the weights and biases made by row number 4.

But consider a language translation model built with a classical neural network. When an English sentence needs to be translated into Chinese, the context of each word needs to be maintained; otherwise the meaning of the sentence can change if we simply translate it word by word.

A classical neural network will translate 'my name is Lee' to 'Wo de Mingcheng Shi Beifeng chu', but the actual translation is different. This is because our neural network does not know the context or meaning hidden in the sequence and placement of words within a sentence. Thanks go to John Hopfield, who came to the rescue: his Hopfield network (1982) was an early form of recurrent network.

In the same way, the translation of "my name is lee" depends on the words "my", "name" and "is" that come before "lee". To achieve this mathematically, there is a trick involved. Recall the ANN perceptron model from before:

Y= W*X + B

Here the weight W and bias B are adjusted using the current value of X alone, independently of every other sample.
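To make the independence concrete, here is a minimal sketch of the forward computation Y = W*X + B applied to a few rows. The feature values, weights and the helper name `dense` are all made up for illustration, and no training happens here. It only shows that each row's prediction depends on that row alone, so changing the order of the rows changes nothing except the order of the results.

```python
def dense(row, weights, bias):
    """Compute Y = W*X + B for one row with several features and one output."""
    return sum(w * x for w, x in zip(weights, row)) + bias

# Hypothetical house rows: [area, population, crime_rate] (made-up numbers)
rows = [
    [120.0, 3.0, 0.2],
    [80.0, 5.0, 0.5],
    [200.0, 1.0, 0.1],
]
weights = [0.5, -1.0, -10.0]  # illustrative values, not trained
bias = 4.0

in_order = [dense(r, weights, bias) for r in rows]
reversed_order = [dense(r, weights, bias) for r in reversed(rows)]

# Reversing the rows only reverses the predictions; no row affects another.
print(in_order == list(reversed(reversed_order)))
```

A sentence does not behave like this: shuffling its words changes its meaning, which is what the recurrent formulation is meant to capture.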

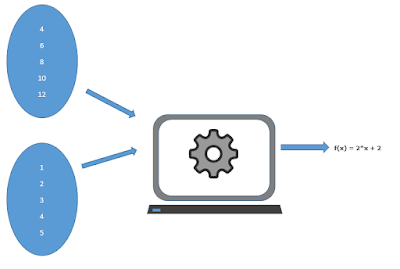

In an RNN, first of all, we need to maintain three different weight matrices: U, V and W. The above diagram shows an RNN being unrolled (or unfolded) into a full network. By unrolling we simply mean that we write out the network for the complete sequence. For example, if the sequence we care about is a sentence of 4 words, the network would be unrolled into a 4-layer neural network, one layer for each word. The formulas that govern the computation happening in an RNN are as follows:

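The hidden state equation for an RNN is of the form

S(t) = f(U*X(t) + W*S(t-1))

where f is a non-linear function such as tanh. Note that the input weight is now called U, while W multiplies the previous hidden state. If we compare it with our ANN equation Y = W*X + B, there is an additional term W*S(t-1), which is basically the memory that keeps track of what has already happened in the sequence up to time t-1. Perhaps surprisingly, this is the term that makes an RNN repetitive (recurrent) in nature.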
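The recurrence can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the update is taken to be S(t) = tanh(U*X(t) + W*S(t-1)) with no bias term, the initial memory is all zeros, and the weights, the one-hot "word" vectors and the helper names (`matvec`, `rnn_step`, `run_rnn`) are invented for the example. Plain lists are used so that nothing beyond the standard library is needed.

```python
import math

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def rnn_step(U, W, x_t, s_prev):
    """One recurrence step: S(t) = tanh(U*X(t) + W*S(t-1))."""
    from_input = matvec(U, x_t)       # contribution of the current input
    from_memory = matvec(W, s_prev)   # contribution of the previous state
    return [math.tanh(a + b) for a, b in zip(from_input, from_memory)]

def run_rnn(U, W, inputs):
    """Feed the inputs in order and return the hidden state after each step."""
    state = [0.0] * len(W)  # assumed starting point: an empty memory
    states = []
    for x_t in inputs:
        state = rnn_step(U, W, x_t, state)
        states.append(state)
    return states

# Illustrative weights: 2 hidden units, 3-dimensional inputs (made-up values)
U = [[0.5, -0.3, 0.8], [0.1, 0.9, -0.4]]
W = [[0.2, -0.6], [0.7, 0.3]]

# Toy one-hot vectors standing in for three words
my, name, lee = [1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]

forward = run_rnn(U, W, [my, name, lee])
backward = run_rnn(U, W, [lee, name, my])

print(forward[-1])   # final state after "my name lee"
print(backward[-1])  # final state after "lee name my"
```

The two final states differ even though both runs see the same three vectors, because each state is computed from the one before it. That dependence on order is exactly what the W*S(t-1) term contributes.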

Unlike a traditional deep neural network, which uses different parameters at each layer, an RNN shares the same parameters (U, V and W above) across all steps. This reflects the fact that we are performing the same task at each step, just with different inputs. This greatly reduces the total number of parameters we need to learn.
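A rough count shows how much sharing saves. The sketch below assumes the usual convention that U maps the input to the hidden state, W maps the previous hidden state to the new one, and V maps the hidden state to the output, and it ignores bias terms. The layer sizes and function names are hypothetical.

```python
def shared_parameter_count(input_size, hidden_size, output_size):
    """Number of weights in U, W and V when they are reused at every step."""
    u = hidden_size * input_size    # U: input -> hidden state
    w = hidden_size * hidden_size   # W: previous hidden state -> hidden state
    v = output_size * hidden_size   # V: hidden state -> output
    return u + w + v

def unshared_parameter_count(input_size, hidden_size, output_size, steps):
    """Hypothetical alternative: a separate U, W and V for every time step."""
    return steps * shared_parameter_count(input_size, hidden_size, output_size)

for steps in (4, 40):
    shared = shared_parameter_count(100, 50, 100)
    unshared = unshared_parameter_count(100, 50, 100, steps)
    print(steps, shared, unshared)
```

With sharing, the count stays the same whether the sentence has 4 words or 40; without it, the count grows in proportion to the sequence length.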

One more thing to remember is that it is not necessary to have an input or an output at each time step. For example, when translating 4 English words into 3 Chinese words we need 4 inputs and 3 outputs. The main feature of an RNN is its hidden state, which captures some information about a sequence.
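The simplest case of inputs and outputs not lining up is a network that reads a whole sequence and produces a single output at the end. The sketch below is not a translation model; it is a deliberately tiny, scalar version of the recurrence (one hidden unit, made-up weights u, w and v, hypothetical function names) that only shows the hidden state carrying information forward until an output is finally needed.

```python
import math

def read_sequence(inputs, u, w):
    """Consume every input, updating a scalar hidden state; emit nothing yet."""
    state = 0.0
    for x_t in inputs:
        state = math.tanh(u * x_t + w * state)
    return state

def many_to_one(inputs, u=0.8, w=0.5, v=2.0):
    """Produce a single output from the final hidden state only."""
    return v * read_sequence(inputs, u, w)

# Four inputs in, one output out (weights and inputs are made-up numbers)
print(many_to_one([1.0, -0.5, 0.25, 2.0]))
```

Producing several outputs, as in the 4-to-3 translation example, follows the same idea: the hidden state built up while reading is what the outputs are generated from.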

To conclude, RNNs are used in a variety of machine learning problems that deal with sequential data, such as

- Language Translation

- Speech Recognition

- Generating Image Description

- Natural Language Processing